Where I work we use TFS and MTM, and there are a number of pain points around them: namely, it's slow, and it can be difficult to work with if you're not used to the UI. Those are two things that, unfortunately, I can't help with for the time being. However, there was one grievance I could do something about: passing a test in MTM doesn't update the Test Case in TFS.

I can understand why this is: an Acceptance Test in TFS and a Test in MTM are two different things. An Acceptance Test in TFS can be run on multiple configurations inside MTM, so why would a passed test in MTM update the Test in TFS?

This meant that the testers had to export the tests to Excel and perform a mass update to pass the TFS test cases, which was a bit of a pain and unnecessary.

I did some research and found other people had the same problem, so I thought how great it would be if we could use the TFS API to update all the test cases against a PBI to "Passed" just by inputting the PBI number.

The hardest part was coming up with the query that would bring back the library of Acceptance Tests. Thankfully, I could create the query in TFS, get the actual code for it, and put that into the program.

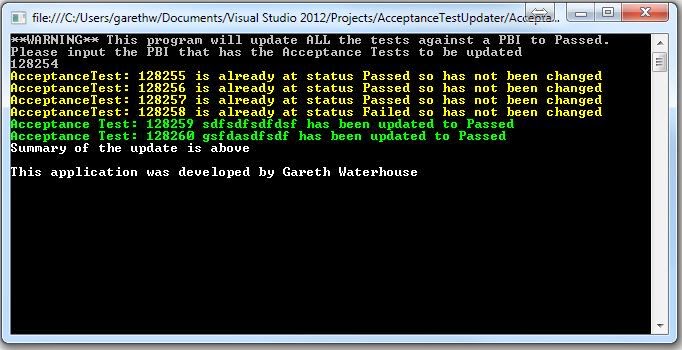
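To give a rough idea of what such a query can look like, here is a minimal sketch in Python that builds a WIQL link query for the Test Cases linked to a PBI. This is not the query from my tool: the function name `build_linked_tests_query` is made up for illustration, and the `Test Case` work item type and the `Microsoft.VSTS.Common.TestedBy-Forward` link type are assumptions that may not match how your project is set up.

```python
def build_linked_tests_query(pbi_id: int) -> str:
    """Build a WIQL link query for the Test Cases that test a given PBI.

    Assumptions (not taken from the real tool): tests are 'Test Case' work
    items joined to the PBI by the 'Tested By' link type.
    """
    if pbi_id <= 0:
        raise ValueError("PBI id must be a positive integer")
    return (
        "SELECT [System.Id], [System.Title], [System.State] "
        "FROM WorkItemLinks "
        f"WHERE ([Source].[System.Id] = {pbi_id}) "
        "AND ([System.Links.LinkType] = 'Microsoft.VSTS.Common.TestedBy-Forward') "
        "AND ([Target].[System.WorkItemType] = 'Test Case') "
        "MODE (MustContain)"
    )


if __name__ == "__main__":
    # Print the query text for a hypothetical PBI number.
    print(build_linked_tests_query(1234))
```

Building the query in the TFS query editor first and copying the generated text is still the easier route, as it uses the exact field and link names from your own process template.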

I figured the simplest way would be a console application: the tester types in the PBI they wish to update, and away it goes and does the magic, writing each updated test to the window and noting the success at the end.

I have sent this round to the teams and it's proving very useful. I've added some exceptions: if a test is already at Failed, the tool doesn't update it, as that can be a manual process, and I wouldn't want this bulk update to change test cases it's not meant to.
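The update rule itself is simple enough to sketch. The Python below is only a model of the logic, not the real tool: `AcceptanceTest` and `pass_acceptance_tests` are hypothetical names, the tests live in memory, and nothing here talks to TFS. A real version would load the work items through the TFS API and save each change back.

```python
from dataclasses import dataclass


@dataclass
class AcceptanceTest:
    """In-memory stand-in for a TFS test case (hypothetical, for illustration)."""
    id: int
    title: str
    state: str


def pass_acceptance_tests(tests, skip_states=("Failed",)):
    """Set each test to 'Passed' unless it is in a state that must be left alone.

    Returns two lists of ids: the tests that were updated and the tests skipped.
    """
    updated, skipped = [], []
    for test in tests:
        if test.state in skip_states:
            # Failed tests are left for a manual decision.
            skipped.append(test.id)
            print(f"Skipped {test.id}: {test.title} (state is {test.state})")
        elif test.state != "Passed":
            test.state = "Passed"
            updated.append(test.id)
            print(f"Updated {test.id}: {test.title}")
    print(f"Done: {len(updated)} updated, {len(skipped)} skipped")
    return updated, skipped


if __name__ == "__main__":
    sample = [
        AcceptanceTest(1, "Login works", "Design"),
        AcceptanceTest(2, "Logout works", "Failed"),
    ]
    pass_acceptance_tests(sample)
```

Keeping the skipped states as a parameter makes it easy to protect other states later without touching the loop.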

I would share the source code, but I think it's very bespoke to the setup here, so it wouldn't necessarily be useful. I just thought it would be worth letting people know that it can be done, and I understand the reasons why it's not in there by default.
